The AI Operations Stack for Business

Authored By:

Sairis

Orchestrated By:

Brad Stutzman

Release Date:

November 20, 2025

Refreshed Date:

11/20/25


ABSTRACT

The AI Operations Stack redefines enterprise AI investment priorities through a unified framework that operationalizes AI at scale. Rather than viewing AI as a technology to bolt onto existing systems, the Stack establishes three strategic layers—AI Governance, Company Context Management, and AI Orchestration—that transform how organizations deploy, govern, and derive value from AI. By centralizing governance policies, activating company-specific knowledge within workflows, and enabling distributed employee-guided orchestration of AI agents, organizations achieve 3x faster time to productivity, 50% reduction in AI support costs, and up to 97% faster ROI realization compared to fragmented deployments. This framework shifts the competitive advantage from access to frontier models (now commoditized) to operational maturity: organizations with mediocre models but excellent governance, knowledge integration, and orchestration capabilities outperform those with frontier models but no operational infrastructure. The result is AI that delivers measurable business outcomes rather than pilot purgatory—transforming employees from task executors to AI directors orchestrating intelligent workflows, and enabling companies to capture exponential productivity gains while maintaining control and compliance across enterprise-wide AI operations.


EXECUTIVE SUMMARY

The AI Paradox: High Adoption, Uneven Impact

The enterprise AI transformation is accelerating at an unprecedented pace. Adoption is high, with over 40% of organizations having attempted to deploy AI across their workforce. Yet beneath the surface lies a paradox: despite $30-40 billion invested in generative AI, 95% of enterprise AI pilots fail to deliver value. The gap between experimentation and value reveals a painful truth: adoption is not the bottleneck. Operationalization is. With the right technology stack, companies can move from the AI theater prevailing in executive culture to operational value that's truly exponential.

Why Enterprise AI Initiatives Fail

Governance Gap: 63% of organizations lack AI governance policies, leaving shadow AI unchecked. The consequences are severe: 97% of AI-related security breaches involve systems without proper access controls. Without centralized governance, AI use remains an unmanageable compliance and security risk.

Context Blindness: Generic AI models lack understanding of your company's unique knowledge, data, processes, and business context. No matter how strong your data centralization strategy is, AI remains surface-level unless paired with organizational knowledge. AI that doesn't know your company can't truly serve it.

Operational Misalignment: Without a coherent operational framework, AI remains siloed. IT becomes a bottleneck rather than an enabler. Business units lack autonomy to solve problems. Internal AI builds succeed only 33% of the time compared to 67% for vendor partnerships — suggesting the problem isn't lack of talent, but lack of operational infrastructure.

The Vision

The traditional enterprise approach to AI is fragmented: departments buy and use AI tools independently. The result is shadow sprawl, inconsistent outcomes, and governance chaos. An AI-First Operation centralizes AI through a unified platform, creating:

Unified governance — one set of policies, access controls, and compliance standards applied enterprise-wide

Shared company context — all AI agents access centrally managed knowledge and data, ensuring consistency and accuracy while maintaining corporate data security

Reusable processes — workflows built in one department can be adapted and reused by others, accelerating innovation

Centralized visibility — IT and leadership gain full transparency into all AI operations: which agents run, who uses them, what outcomes they deliver, and what compliance risks exist

Single interface for employees — one unified AI assistant that adapts to role, department, and task

Outcomes

The result: organizations scale AI faster, with lower risk, higher ROI, and consistent employee experiences across all workflows. IT maintains control without becoming a bottleneck. Specifically, organizations see:

3x faster time to productivity compared to ad-hoc AI deployments

50%+ reduction in AI-related support costs through centralized governance

Up to 97% faster ROI realization when orchestration is managed by departments rather than IT

Quantifiable productivity gains (hours saved, decisions accelerated, processes automated)

The competitive imperative is clear:

Organizations that prioritize strategic operational AI infrastructure now will capture disproportionate value. Those that delay will struggle to convert AI investments into business outcomes.

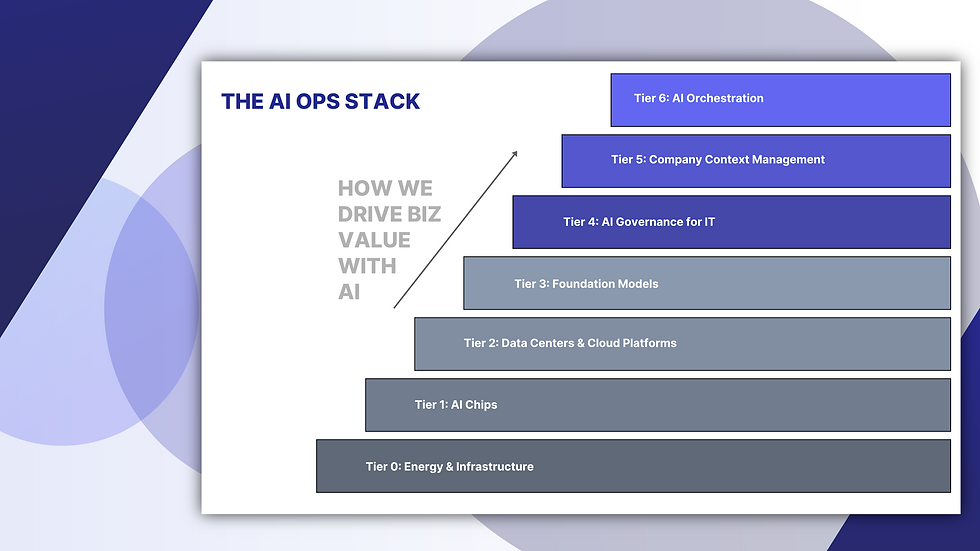

Introducing the AI Operations Stack

This white paper presents a new framework: the AI Operations Stack — a seven-tier model that redefines how organizations should think about and invest in enterprise AI. The stack elevates governance, knowledge, and orchestration to their proper strategic importance, moving the focus away from infrastructure wars toward the control layers that actually drive value.

Table of Contents

Executive Summary

The State of AI Adoption in Business

The Classic Enterprise Technology Chain

The Perceived AI Chain

Why This is a False Perception

The Emerging AI Operations Stack

The Place of Foundation Models

AI Governance for IT

Infusing Company Context Into AI

Empowering Employees to be AI Orchestrators

Defining AI-Driven Company Operations

An Organizational Operating Model

Scaling Use Cases, Realizing Value

1. THE STATE OF AI ADOPTION IN BUSINESS

Why AI Transformation has Stalled

The enterprise AI story is a paradox. Adoption is accelerating, but transformation is stalling. Organizations are investing heavily in generative AI — $30-40 billion across enterprise initiatives — yet 95% of corporate AI pilots deliver zero measurable ROI. Only 5% of custom enterprise AI tools successfully reach production with impact.

The reasons for this collapse are structural, not technical. Four problems dominate enterprise AI failures:

1. Silos Block Enterprise Coordination

Departments operate independently, adopting AI tools without connecting them to enterprise strategy. According to Harvard Business Review's September 2025 analysis, AI is reinforcing organizational silos rather than breaking them down.

The pattern is familiar: the sales team deploys AI for lead scoring. The customer service team implements chatbots independently. The operations team automates procurement processes. Each team sees localized efficiency gains, yet the organization fails to deliver on its overall strategy. One bank illustrates this: the risk management team's AI (built on credit scores) flagged a customer segment as high-risk, while the marketing team's AI (analyzing digital behavior) identified the same segment as prime acquisition targets. The two systems reached opposing conclusions, a direct threat to business strategy.

Departments lack incentives to collaborate. When performance metrics are function-specific — sales chases revenue, HR tracks engagement, operations pursues efficiency — AI systems optimize for their narrow domain rather than for enterprise outcomes.

2. Company Context Integration Failures

Generic foundation models have no knowledge of your company. When deployed without company context, they hallucinate, generate inaccurate recommendations, and erode user trust. Yet most enterprises treat knowledge management as an afterthought rather than a strategic layer.

Users quickly recognize this limitation. According to MIT's 2025 research, the same professionals who use ChatGPT daily for personal work abandon enterprise tools because those tools lack memory, fail to retain feedback, and require full context input on every interaction. Ninety percent of users prefer humans for high-stakes, multi-step work, not because AI can't reason, but because it can't adapt to company-specific context.

3. Governance Gaps Create Compliance Risk

The governance problem is severe. 63% of organizations lack AI governance policies, leaving systems unmonitored and uncontrolled. The consequences are stark: 97% of AI-related security breaches involve systems without proper access controls.

Beyond security, the absence of governance prevents consistent operations. When departments deploy AI independently, there's no unified policy on which models are approved, which data is accessible, or how compliance is documented. The result: scattered risk, inconsistent brand voice, and regulatory exposure.

4. Operational Misalignment

Even when AI projects are technically sound, they often solve the wrong problems. MIT's 2025 analysis reveals that 50-70% of AI budgets flow to sales and marketing — not because these functions deliver the highest ROI, but because their outcomes are easiest to measure and explain to leadership.

Meanwhile, the real value lies in ignored functions. Back-office automation — procurement, finance, operations — delivers measurable cost savings: $2-10M annually in BPO elimination, 30% reduction in agency spend, and $1M+ in outsourced risk management savings. Yet these functions receive minimal AI investment because their value is harder to quantify and slower to surface in executive conversations.

Organizations are investing in visible problems rather than high-ROI opportunities, a bias that compounds over time.

The Shift from AI Hype to AI Operations

The market is moving decisively beyond "Can we build AI?" toward "How do we operationalize AI responsibly and at scale?"

Enterprises now need an architecture that unites governance, knowledge management, and orchestration — not just access to LLMs. The competitive edge belongs to organizations that can:

Control AI deployment through unified governance policies, ensuring every interaction is auditable, compliant, and aligned with organizational standards

Activate company-specific knowledge within AI workflows so every response is accurate, contextualized, and branded

Enable distributed AI management where business teams (not just IT) design and deploy AI agents, accelerating innovation and reducing IT bottlenecks

Monitor, audit, and continuously improve AI outcomes, measuring impact against business metrics rather than software benchmarks

This is the shift from "AI theater" (visible projects, limited value) to "AI operations" (integrated systems, measurable ROI).

The Shadow AI Economy: The Gap Between Policy and Reality

Here's a telling statistic: while only 40% of companies have purchased official LLM subscriptions, employees at over 90% of organizations regularly use personal AI tools for work.

Employees are crossing the GenAI divide unofficially, using ChatGPT, Claude, and Gemini on personal accounts to automate significant portions of their jobs, often without IT approval or knowledge. Many shadow AI users rely on these tools multiple times per day, while their company's official AI initiatives stall in the pilot phase.

This shadow economy demonstrates a critical insight: individuals can successfully operationalize AI when given flexible, responsive tools. Organizations that fail to harness this trend or, worse, prohibit it, are leaving productivity on the table while opening themselves to uncontrolled governance risk.

Forward-thinking organizations are beginning to bridge this gap by learning from shadow usage, analyzing which personal tools deliver value, then procuring enterprise alternatives that maintain the flexibility employees demand while adding security and governance controls.

The GenAI Divide in Deployment Rates

The GenAI divide is clearest when examining deployment rates:

Generic LLMs (ChatGPT, Copilot): 80% explored, ~40% deployed

Custom enterprise tools: 60% evaluated, 20% piloted, 5% reached production

This steep drop-off reveals the core problem: custom and embedded AI tools fail due to three barriers:

Brittle workflows — tools don't adapt when processes change

Lack of contextual learning — systems don't improve from feedback or retain memory

Misalignment with operations — solutions don't integrate into day-to-day work

Mid-market firms move markedly faster than enterprises. Top performers report 90-day timelines from pilot to full implementation; enterprises take 9+ months. The difference isn't budget or resources; it's organizational structure. Mid-market companies decentralize implementation authority while retaining accountability; enterprises maintain central control, slowing decisions and compounding misalignment.

Investment Bias Perpetuates Failure

The allocation of AI investment reflects the divide in action. Executives allocate roughly 50-70% of AI budgets to sales and marketing, driven by:

Measurement clarity — Demo volume and email response time map directly to board-level KPIs

Visibility — Sales wins are publicly celebrated; operational gains are quieter

Trust bias — Procurement leaders gravitate toward established vendors and peer referrals, not toward startups pitching novel use cases

This investment bias perpetuates failure by directing resources toward visible but often less transformative use cases, while the highest-ROI opportunities — back-office automation, compliance monitoring, knowledge integration — remain underfunded.

Key Takeaways

The research is unambiguous: companies with high modernization maturity that prioritize governance and compliance achieve twice the performance improvements of laggards. Governance isn't paperwork — it's a catalyst for resilience and ROI.

Yet only a small cohort of "Gen AI high performers" have translated adoption into material returns, attributing over 10% of their EBIT to Gen AI solutions.

2. UNDERSTANDING THE AI VALUE CHAIN

The Classic Enterprise Technology Chain

To understand why enterprises struggle with AI adoption, it helps to first understand how enterprises have successfully adopted technology for decades.

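The classic enterprise technology value chain has always been a layered stack: infrastructure such as servers, networks, and databases at the base, with business applications on top.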

The critical insight: Enterprise technology value has always lived in the upper tiers. Organizations didn't win competitive advantage by owning better servers or faster networks. They won by implementing better business applications that solved real problems.

CIOs understood this intuitively. They managed infrastructure as a cost center (keep it running, minimize spend), but invested strategically in applications that touched business processes. The $50 million ERP implementation wasn't about the database — it was about redesigning workflows, standardizing processes, and creating measurable business value.

Purpose of this framework: Understanding where value actually sits in a technology stack determines where you invest, how you measure success, and ultimately whether you win or lose in the market.

The Perceived AI Chain

When generative AI exploded in 2023-2024, business leaders assumed the value chain would work the same way it always had. They expected that AI would slot into the existing enterprise technology stack just like every other technology before it.

The assumption: the AI chain would mirror the Classic Enterprise Technology Chain perfectly. AI would be procured like any enterprise software. Organizations would buy Salesforce Einstein, embed Copilot in Office 365, and integrate ChatGPT into ServiceNow. Departments would use these AI-enhanced applications just as they had adopted CRM, ERP, and other business applications and point solutions in the past.

Vendors scrambled to defend their revenue base by bolting on chatbots.

IT leaders, meanwhile, began losing systematic control over company AI. Their only remedy was company policy: "Use only approved tools. ChatGPT is prohibited without significant use policy and security review."

This assumption was deeply reasonable. But it was also profoundly wrong.

Neither strategy worked.

Why the Perceived AI Chain Fails: The Control Problem

Here's what actually happened:

Tier 6 functioned as expected. Organizations purchased enterprise AI tools from established vendors, and these were controlled, audited, and integrated into existing systems.

But then Tier 7 emerged, completely outside the perceived stack. Employees immediately adopted third-party AI tools outside corporate control. ChatGPT Plus subscriptions proliferated. Claude became the default for coding. Perplexity became the research engine. By mid-2025, employees at over 90% of organizations were regularly using personal AI tools for work, often without approval.

IT's response was predictable policy: "Don't use ChatGPT without approval."

Employee response was equally predictable: Ignore IT.

Why? Because ChatGPT solves problems faster and better than anything IT controls. Salespeople paste customer emails into ChatGPT to draft responses. Engineers use Claude for code generation. Analysts use Perplexity for research. The tools work. They're free or inexpensive. They're instantly available.

Policies can't compete with utility and immediacy.

The critical realization: Vendor-centric purchasing strategies (Tiers 0-6) are insufficient because the real adoption (Tier 7) happens outside IT's technical control. Rather than managing a controlled, orchestrated set of tools, IT now manages a shadow economy of unapproved AI usage it can't see, monitor, or govern.

Why Bolt-On Solutions Fail: The Data Architecture Problem

Even if IT could prevent shadow AI adoption, the Perceived Stack has a deeper architectural flaw: traditional enterprise data architecture is incompatible with how LLMs actually work.

Traditional enterprise systems (ERP, CRM, SharePoint, ECM) organize data for human navigation—they use keyword indexing. LLMs cannot work reliably with this architecture.

The Incompatibility

LLMs fail on structured keyword search. Ask ChatGPT embedded in your CRM "What's our Q4 revenue?" and it guesses rather than retrieves—hallucinating plausible-sounding numbers instead of facts. LLMs excel at reasoning about fuzzy, contextual information. They fail at precision queries requiring exact data retrieval.

Traditional keyword search fails on unstructured reasoning. Searching for "refund policy" in SharePoint returns 47 documents containing those keywords. Humans manually synthesize the results. But LLMs struggle with ambiguous, incomplete information—so they hallucinate.

The Solution: Vectorization

LLMs excel when data is vectorized: converted into semantic embeddings that capture meaning, not just keywords. Ask a vectorized knowledge base "What's our refund policy?" and semantic search returns documents that are relevant in meaning rather than mere keyword matches, so the LLM reasons over actual company knowledge instead of guessing.
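To make the keyword-versus-semantic contrast concrete, here is a toy sketch in Python. The three-dimensional vectors are hand-picked stand-ins (an assumption) for what a real embedding model would produce, and the document titles are hypothetical. Keyword matching on "refund policy" misses the returns guidelines entirely and pulls in a parking policy, while cosine similarity over the vectors ranks the returns document first.

```python
import math

# Hypothetical, hand-picked 3-dimensional "embeddings" for illustration only.
# A real system would obtain these vectors from an embedding model.
DOCS = {
    "Returns and reimbursement guidelines": [0.9, 0.1, 0.0],
    "Refund processing SLA for the finance team": [0.6, 0.5, 0.1],
    "Office policy on parking permits": [0.0, 0.2, 0.9],
}
QUERY_TEXT = "refund policy"
QUERY_VEC = [0.85, 0.2, 0.05]  # hypothetical embedding of QUERY_TEXT


def cosine(a, b):
    """Cosine similarity: dot product divided by the product of vector norms."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def keyword_search(query, titles):
    """Return titles sharing at least one lowercase word with the query."""
    terms = set(query.lower().split())
    return [t for t in titles if terms & set(t.lower().split())]


def semantic_search(query_vec, docs, top_k=2):
    """Rank documents by cosine similarity to the query vector."""
    scored = sorted(docs.items(), key=lambda kv: cosine(query_vec, kv[1]), reverse=True)
    return [title for title, _ in scored[:top_k]]


if __name__ == "__main__":
    print("keyword: ", keyword_search(QUERY_TEXT, DOCS))
    print("semantic:", semantic_search(QUERY_VEC, DOCS))
```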

Why Legacy Systems Cannot Be Patched

SharePoint + ChatGPT still returns keyword results. ECM + Copilot still uses traditional search. The data model is incompatible. Bolting AI onto these systems fails because LLMs receive ambiguous, incomplete information.

Organizations cannot solve this by upgrading SharePoint or adding AI to traditional content management platforms. The architecture is wrong. The data model is wrong. The search mechanism is wrong.

The only solution is to build an AI-native knowledge layer above legacy systems that can:

Ingest company documents and intelligence, extracting all unstructured data

Vectorize all unstructured content into semantic embeddings

Authorize access to vectorized intelligence for the appropriate groups of users in the organization

Orchestrate AI agents that reason with both vectorized knowledge and structured data

Govern all interactions with access control, audit trails, and compliance policies
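The five responsibilities above can be sketched as a minimal, in-memory Python class. This is an illustrative assumption, not Sairis's implementation or any vendor's API: `toy_embed` is a bag-of-words placeholder for a real embedding model, and the class, group names, and chunk size are hypothetical. The key design point is that authorization is enforced at retrieval time, before any text reaches a model, and every query leaves an audit record.

```python
import math
import re
from collections import Counter
from dataclasses import dataclass, field
from datetime import datetime, timezone


def toy_embed(text):
    """Placeholder for a real embedding model (assumption): a sparse
    bag-of-words vector. Real embeddings capture meaning; this does not."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse vectors stored as Counters."""
    dot = sum(a[k] * b[k] for k in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


@dataclass
class Chunk:
    doc_id: str
    text: str
    vector: Counter
    allowed_groups: frozenset


@dataclass
class KnowledgeLayer:
    chunk_words: int = 200  # hypothetical chunk size, in words
    chunks: list = field(default_factory=list)
    audit_log: list = field(default_factory=list)

    def ingest(self, doc_id, text, allowed_groups):
        """Ingest, vectorize, and authorize: split text into chunks, embed each
        chunk, and attach the groups permitted to read it."""
        words = text.split()
        for start in range(0, len(words), self.chunk_words):
            piece = " ".join(words[start:start + self.chunk_words])
            self.chunks.append(Chunk(doc_id, piece, toy_embed(piece), frozenset(allowed_groups)))

    def search(self, user, user_groups, query, top_k=3):
        """Retrieve only chunks the user may see, and audit every query (govern)."""
        query_vec = toy_embed(query)
        visible = [c for c in self.chunks if c.allowed_groups & set(user_groups)]
        ranked = sorted(visible, key=lambda c: cosine(query_vec, c.vector), reverse=True)[:top_k]
        self.audit_log.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "user": user,
            "query": query,
            "returned": [c.doc_id for c in ranked],
        })
        return ranked


def build_agent_prompt(question, chunks):
    """Orchestrate (simplified): hand authorized context to whichever model
    the governance layer approves. The prompt wording is hypothetical."""
    context = "\n".join(f"[{c.doc_id}] {c.text}" for c in chunks)
    return f"Answer using only this company context:\n{context}\n\nQuestion: {question}"


if __name__ == "__main__":
    kl = KnowledgeLayer()
    kl.ingest("refund-policy", "Customers may request a refund within 30 days.", {"support", "finance"})
    kl.ingest("salary-bands", "Engineering salary bands are confidential.", {"hr"})
    hits = kl.search("alice", {"support"}, "How long do customers have to request a refund?")
    print(build_agent_prompt("What is our refund window?", hits))
    print(kl.audit_log[-1]["returned"])  # only documents alice is authorized to see
```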

Organizations attempting to patch legacy systems will continue to experience AI failures. Those building AI-native knowledge layers will capture reliable, scalable, measurable AI value.

This is the operational layer that makes AI work reliably — and it's precisely what the AI Operations Stack provides in Tiers 5-6 (Knowledge + Orchestration). The Perceived Stack assumes you can just add AI to Tier 6 and have it work. The reality is that Tiers 4-6 need fundamental reorganization around AI-native principles.

This realization extends beyond Sairis. Major cloud providers—AWS, Microsoft Azure, and Google Cloud—have invested heavily in AI infrastructure and services. Yet, despite offering vectorization, RAG frameworks, knowledge base tools, and orchestration components, enterprise AI success rates remain flat.

The fundamental issue: These providers sell components, not systems. Organizations attempting to assemble a coherent AI stack from individual cloud services face two barriers:

Architectural Fragmentation — Each tool solves one piece independently. Knowledge bases don't automatically connect to governance policies. Orchestration workflows operate independently of context management. Result: Siloed solutions that don't scale.

Hidden Total Cost of Ownership — A single knowledge base for vectorized data can cost $1,200+/year in cloud hosting. Add architectural design (hiring engineers), ongoing management, and security integration. Each departmental use case becomes a separate project, siloed from others. Organizations end up building 5-10 independent systems when they should have built one unified platform.
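The cost arithmetic scales linearly with every siloed system. Using only the paper's figure of roughly $1,200 per year to host one knowledge base, a minimal Python sketch of the hosting floor looks like this; engineering, management, and security-integration costs are left as a reader-supplied parameter (defaulting to zero) because the text gives no figures for them.

```python
def siloed_annual_cost(num_systems, hosting_per_kb=1_200, other_costs_per_system=0):
    """Annual cost when every use case runs its own knowledge base.

    hosting_per_kb defaults to the ~$1,200/year lower bound cited in the text;
    other_costs_per_system (engineering, management, security integration)
    is a placeholder the reader must supply.
    """
    return num_systems * (hosting_per_kb + other_costs_per_system)


if __name__ == "__main__":
    for n in (1, 5, 10):
        print(f"{n:>2} siloed systems: ${siloed_annual_cost(n):,}/year hosting floor")
```

At the 5-10 independent systems described above, hosting alone comes to $6,000-$12,000 per year before any engineering effort is counted.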

This is why companies remain stuck at 5% success despite massive cloud investments. The stack isn't broken because the tools are bad. It's broken because the approach is still fundamentally fragmented.


3. THE EVOLVED AI OPERATIONS STACK

A Framework for Operationalizing AI

The previous section revealed why the Perceived AI Chain fails: control is lost, bolt-ons don't work, and data models are incompatible. Organizations need a different framework entirely.

The AI Operations Stack is a seven-tier model (Tiers 0-6) that repositions enterprise AI around the layers that actually drive value: governance, knowledge management, and orchestration. Unlike the Perceived Chain (which assumes purchasing power and policy solve the problem), the Operations Stack assumes organizational maturity and architectural design are the real differentiators.

This framework answers a fundamental question:

How do enterprises build reliable, scalable, governed AI that drives measurable business outcomes?

Stack Tier Breakdowns

For purposes of this white paper, Tiers 0, 1, and 2 are covered only briefly; they largely fall out of relevance for understanding how to operationalize AI inside a company.

Tier 0: Energy Infrastructure

The foundation of all computation. Power, cooling, and sustainability underpin every AI workload. Critical but fully commoditized.

Tier 1: AI Chips

Physical processing units (GPUs, TPUs, ASICs) that execute compute. Rapid innovation continues, but access is democratized and costs decline 30% annually. Commodity layer.
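A constant 30% annual decline compounds: after n years the cost is (1 - 0.30)^n of the starting point, so it roughly halves every two years (0.7^2 = 0.49). A small Python helper makes the projection explicit; the starting cost is a hypothetical input.

```python
def cost_after_years(initial_cost, years, annual_decline=0.30):
    """Cost remaining after a constant annual percentage decline compounds for `years`."""
    return initial_cost * (1 - annual_decline) ** years


if __name__ == "__main__":
    start = 100.0  # hypothetical starting cost, in any currency unit
    for year in range(4):
        print(year, round(cost_after_years(start, year), 1))
```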

Tier 2: Cloud Platforms

Cloud Platforms provide the scalable compute environments and global infrastructure necessary to run and expand enterprise AI workloads reliably. While their role is foundational for performance and reach, their relevance to AI operations is primarily about scalability, elasticity, and worldwide accessibility—not day-to-day business operations.

IT departments should carefully evaluate their AI providers’ cloud architecture choices, asking which regions, data centers, and redundancy models are used, how data is isolated, and whether the provider can guarantee data residency or portability. They must also understand the shared responsibility model—where the cloud platform secures the infrastructure, but the AI provider and customer remain responsible for application-level compliance and data handling. For regulated industries, this means verifying alignment with frameworks such as FedRAMP, HIPAA, and SOC 2, ensuring every layer of the AI stack—from compute to orchestration—meets enterprise and government compliance requirements.

A growing option for highly regulated or security-sensitive organizations is a customer-hosted SaaS or dedicated cloud instance model, where the AI provider deploys the platform directly into the customer’s own cloud (such as AWS or Azure). This approach offers the data isolation and sovereignty benefits of on-premise infrastructure while keeping the software current through the provider’s managed CI/CD pipeline. For industries bound by frameworks like FedRAMP, HIPAA, or ITAR, this hybrid deployment pattern ensures compliance without sacrificing modernization, uptime, or vendor innovation velocity.

Tier 3: Foundation Models

Large language models from OpenAI, Anthropic, Google, and others

Large-scale language and multimodal models built by OpenAI, Anthropic, Google, Meta, and others. Performance gaps between frontier and open-weight models are narrowing. Access is democratized. Model capability is increasingly commodity.

What Are Foundation Models?

Foundation models are the raw AI engines — the neural networks trained on vast amounts of text and images that can predict the next token in a sequence. They're the "thinking" layer: the ability to understand language, reason about concepts, and generate coherent text.

These models are the engine. They're not complete products. They're the raw capability that everything else in the stack builds on top of.

Understanding the Difference: Models vs. Applications

Here's where confusion proliferates. Most people conflate the model with the application. This is a critical distinction. When you use ChatGPT, you're not just accessing the model. You're using OpenAI's proprietary application layer built around the model.

ChatGPT (The Application)

When you visit chatgpt.com and talk to ChatGPT, you're using an application built on top of OpenAI's GPT models.

ChatGPT (the app) includes:

The GPT model (the engine)

PLUS a web interface for easy access

PLUS memory of your conversation history

PLUS integration with plugins and tools

PLUS OpenAI's cloud infrastructure

PLUS OpenAI's data retention and privacy policies

VS.

GPT-5 (The Model)

Developers and enterprises can also access the raw GPT-5 model through OpenAI's API. This is Tier 3: direct access to the foundation model.

Using the GPT-5 API includes:

The GPT-5 model (the engine)

No web interface (you build your own)

No persistent memory (you manage conversation state)

No plugins (you integrate your own tools)

Pay-per-token pricing

Your data runs on OpenAI's infrastructure (with specific data policies)
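The "you manage conversation state" point is easy to miss, so here is a minimal Python sketch. `call_model` is a hypothetical stand-in for a raw model API call (not OpenAI's SDK); the role/content message shape mirrors common chat-style APIs. Because the endpoint keeps no memory between calls, the application must store the history and resend all of it on every request, which is the work ChatGPT (the app) does for you.

```python
from typing import Callable, Dict, List

Message = Dict[str, str]


class Conversation:
    """Application-layer memory for a stateless model API.

    The model endpoint retains nothing between calls, so this class keeps
    the history and resends all of it with every request.
    """

    def __init__(self, call_model: Callable[[List[Message]], str], system_prompt: str):
        self.call_model = call_model
        self.messages: List[Message] = [{"role": "system", "content": system_prompt}]

    def ask(self, user_text: str) -> str:
        self.messages.append({"role": "user", "content": user_text})
        reply = self.call_model(list(self.messages))  # full history, every time
        self.messages.append({"role": "assistant", "content": reply})
        return reply


def fake_model(messages: List[Message]) -> str:
    """Hypothetical stand-in for a real API call: reports how many user
    turns it received, to show that earlier turns are resent."""
    user_turns = sum(1 for m in messages if m["role"] == "user")
    return f"I can see {user_turns} user message(s)."


if __name__ == "__main__":
    convo = Conversation(fake_model, "You are a helpful assistant.")
    print(convo.ask("Summarize our refund policy."))  # I can see 1 user message(s).
    print(convo.ask("Now shorten it."))               # I can see 2 user message(s).
```

Each request grows with the conversation, which is also why long chats consume more input tokens under pay-per-token pricing.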

The Strategic Implication: Models Are Commodity, Applications Are Strategy

This distinction matters enormously.

Model access is now fully commoditized:

Every organization can access GPT-4, Claude, and Gemini at the same price

Inference costs have dropped 280-fold in two years

Open-weight alternatives (Llama, Mistral) provide comparable performance at near-zero licensing cost

Competitive advantage through "better model access" has collapsed
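A 280-fold drop over two years implies a constant per-year factor of 280^(1/2), about 16.7x, meaning each year's inference cost is roughly 6% of the previous year's. A short Python check of that arithmetic:

```python
def implied_annual_factor(total_factor, years):
    """Constant per-year improvement factor that compounds to total_factor over `years`."""
    return total_factor ** (1 / years)


if __name__ == "__main__":
    factor = implied_annual_factor(280, 2)
    print(f"~{factor:.1f}x cheaper per year; each year costs {1 / factor:.0%} of the prior year")
```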

Application differentiation is where value lives:

ChatGPT's value proposition is ease-of-use and conversation memory

Copilot's value proposition is integration with Office 365

Claude's value proposition is extended context windows and instruction-following

But none of these applications solve the enterprise problems we discussed earlier: governance, knowledge integration, orchestration, or measurable ROI

Organizations that treat Tier 3 models as their competitive advantage will lose. Organizations that treat Tier 3 as table-stakes and compete through strategic application of governance (Tier 4), knowledge (Tier 5), and orchestration (Tier 6) will win.

The Confusion in Practice: Why Companies Get Lost Here

Many enterprises make a strategic error: they assume that buying access to a better model (GPT-4 instead of GPT-3.5, or Claude instead of Gemini) will solve their AI adoption challenges.

It won't.

The problem is not model quality. The problems are:

Lack of governance (Tier 4) — leading to shadow AI and compliance risk

Lack of company context (Tier 5) — leading to hallucinations and inaccuracy

Lack of orchestration (Tier 6) — leading to AI remaining a one-off assistant rather than a productivity engine

An enterprise with mediocre models but excellent governance, knowledge, and orchestration will outperform an enterprise with frontier models but no operational infrastructure.


Tier 4: AI Governance for IT

AI guardrail controls, compliance monitoring, and cost management

This is where organizational control begins. Governance is the first enterprise-managed layer and the foundation for everything above it.

What It Encompasses

Policy definition and enforcement — Which models are approved? Which data is accessible? What behaviors are allowed?

Access control — Attribute-based and role-based permissions ensure users only interact with authorized knowledge and models

Compliance monitoring — Every interaction is logged, auditable, and compliant with regulatory requirements

Cost management — Token utilization is visible, predictable, and controlled in real-time

Brand consistency — All AI interactions align with organizational standards and guardrails
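The access-control and compliance-monitoring ideas above can be combined in a single policy check. The following Python sketch is a hypothetical illustration, not a specific product's API: a role-based rule decides which models a user may call, an attribute-based rule (department) decides which knowledge sources they may read, and every decision, allowed or denied, is written to an audit log.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set


@dataclass
class Policy:
    # Role-based rule: which models each role may call (model names are hypothetical)
    models_by_role: Dict[str, Set[str]]
    # Attribute-based rule: which departments may read each knowledge source
    departments_by_source: Dict[str, Set[str]]


@dataclass
class User:
    name: str
    role: str
    department: str


@dataclass
class GovernanceGateway:
    policy: Policy
    audit_log: List[dict] = field(default_factory=list)

    def authorize(self, user: User, model: str, source: str) -> bool:
        """Allow the request only if both the model and the data source are permitted."""
        model_ok = model in self.policy.models_by_role.get(user.role, set())
        source_ok = user.department in self.policy.departments_by_source.get(source, set())
        allowed = model_ok and source_ok
        # Compliance monitoring: log every decision, including denials
        self.audit_log.append(
            {"user": user.name, "model": model, "source": source, "allowed": allowed}
        )
        return allowed


if __name__ == "__main__":
    policy = Policy(
        models_by_role={"analyst": {"approved-model-a"}},
        departments_by_source={"finance-reports": {"finance"}},
    )
    gateway = GovernanceGateway(policy)
    alice = User("alice", "analyst", "finance")
    bob = User("bob", "analyst", "sales")
    print(gateway.authorize(alice, "approved-model-a", "finance-reports"))  # True
    print(gateway.authorize(bob, "approved-model-a", "finance-reports"))    # False: wrong department
    print(gateway.authorize(alice, "unapproved-model", "finance-reports"))  # False: model not allowed
```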

Why It's Strategic

Without governance, AI is a security and compliance liability. With it, AI becomes a controlled, trustworthy asset that IT can confidently scale enterprise-wide.

The governance paradox: many IT teams assume governance means "slow down AI adoption." In reality, operationalized governance accelerates adoption because it eliminates fear.

When IT can definitively say "yes" while maintaining control, departments embrace AI rather than hide it in shadow tools.

Critical Governance Capabilities

Model control: IT selects which LLMs are available to which users

Data access management: Fine-grained permissions determine which knowledge each user can access

Prompt governance: Department leaders establish consistent interaction patterns within their teams

Compliance monitoring: Audit trails, usage reports, and outcome tracking enable regulatory compliance

Token tracking: Real-time visibility into AI usage costs and spending
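Token tracking and cost management reduce to simple bookkeeping once every request flows through one governed gateway. In this hypothetical Python sketch, the price per 1,000 tokens and the department budgets are placeholder inputs rather than real vendor rates, and requests that would exceed a budget are refused; alerting instead of blocking would be an equally valid design choice.

```python
class TokenTracker:
    """Per-department token accounting against a spending budget.

    price_per_1k_tokens and the budgets are hypothetical placeholders,
    not real vendor rates.
    """

    def __init__(self, price_per_1k_tokens, budget_by_dept):
        self.price_per_1k = price_per_1k_tokens
        self.budgets = dict(budget_by_dept)
        self.spend = {}

    def record(self, dept, tokens):
        """Charge a request to a department; refuse it if it would exceed the budget."""
        cost = tokens / 1000 * self.price_per_1k
        current = self.spend.get(dept, 0.0)
        if current + cost > self.budgets.get(dept, 0.0):
            return False  # over budget (or no budget set): block the request
        self.spend[dept] = current + cost
        return True

    def report(self):
        """Real-time view of spend per department, rounded to cents."""
        return {dept: round(amount, 2) for dept, amount in self.spend.items()}


if __name__ == "__main__":
    tracker = TokenTracker(price_per_1k_tokens=0.01, budget_by_dept={"sales": 1.00})
    print(tracker.record("sales", 50_000))  # True: $0.50 of $1.00 used
    print(tracker.record("sales", 60_000))  # False: would reach $1.10
    print(tracker.report())
```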

Tier 5: Company Context Management

Company intelligence that evolves over time

This is where organizational efficiency begins. The absence of Company Context Management is where many company AI initiatives fail, and why over 70% of employees claim that using ChatGPT and Microsoft Copilot actually reduces their productivity.

Why Context Is Critical in the Age of Agentic AI

Foundation models are powerful but contextually blind. They have no company-specific information built in to look up or reason over when solving problems in a company's unique landscape. They don't know who your employees are, let alone what each employee is allowed to access. And they don't understand each company's culture, processes, or best practices, or the ways its employees have already learned to think through and solve problems.

Put another way, AI is like a smart employee who forgets everything at the end of each day: every morning, each user must manually re-supply the information it needs to be helpful, which can take hours away from productive work. Companies would never keep a staff member who had to repeat new-employee onboarding and role training from scratch every single day, and they shouldn't accept it from their AI assistants either. This lack of context is also a major source of AI hallucinations: AI that doesn't understand the proper context generates plausible-sounding but factually incorrect information, which erodes user trust and creates liability for the company.

In the age of agentic AI — where agents autonomously research, reason, and take action — context becomes existential. An agent without company context cannot:

Apply business logic accurately (pricing rules, commission structures, compliance requirements)

Access customer or product information reliably

Synthesize across multiple data sources to make informed decisions

Operate within brand and policy guardrails

Generate measurable, auditable outcomes

Agentic AI without context is AI without judgment. It's dangerous.

Context transforms agentic AI from a liability into an asset. When agents have access to comprehensive, accurate, governed company knowledge and data, they become reliable productivity multipliers. But that context has to be managed at the enterprise level, not left to individual users.

Without enterprise-level context management, every user ends up re-teaching the AI the same company processes, information, and context. That does not scale.

Why Enterprise Control Over Context Matters

Context management is not just a technical layer; it's a strategic asset. Here's why enterprises must control it centrally:

Accuracy and Consistency: When context is fragmented across departments, AI agents receive contradictory information. One agent accesses pricing from Finance; another accesses an outdated price list in a shared folder. Inconsistency erodes trust and creates operational chaos.

Compliance and Governance: Regulatory requirements (HIPAA, SOX, GDPR) demand that organizations control data access. If context is stored across dozens of systems without unified governance, compliance becomes impossible to demonstrate and audit.

Security: Distributed context across unsecured systems exposes corporate knowledge to unauthorized access, external compromise, and training data leakage. Centralized context management with security controls prevents this.

Operational Efficiency: When context is managed centrally, updates propagate instantly across all AI agents. When HR updates benefits, all HR agents immediately reflect the change. Decentralized context means manual updates, inconsistency, and wasted employee time.

Preservation of IT Time: Without centralized context management, IT becomes the bottleneck. Every business unit requests custom data connections, APIs, and integrations. IT drowns. Centralized context management with self-service publishing frees IT to focus on infrastructure and governance, not context curation.

Major Categories of Company Context Under Management

There are three major categories of Company Context needed to operationalize AI across a company. What an employee is allowed to access is largely managed at the AI Governance level (Tier 4). Company processes are largely managed at Tier 6 and are broken down in that section. Tier 5 focuses on how the AI knows the company's specific information and data, through an AI intelligence hub.

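Information authorization (what each employee may access): managed in Tier 4

Company intelligence (knowledge and data): managed in Tier 5

Company processes: managed in Tier 6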

Company Intelligence

Imagine a brand-new employee on their first day. They don't yet understand the company's culture, values, history, or how things are done, and it takes time to onboard them. Now contrast that with an employee who knows everything about the company: who everyone is, what the history is, and why things are the way they are. That employee can drop into any meeting, or support any colleague working on a task or problem, and bring all of that knowledge into the process. This is the difference between AI built on Company Intelligence with context management controls and the generic AI that comes out of the box with ChatGPT or Microsoft Copilot. To understand the needs here, start by separating two types of information: unstructured data and structured data.

Knowledge Management: Unstructured Context

Knowledge management encompasses the organization's unstructured intelligence — policies, procedures, FAQs, training materials, institutional memory — transformed into AI-ready context.

The Vectorization Imperative

For agentic AI to work reliably with unstructured knowledge, that knowledge must be vectorized — converted into semantic embeddings that capture meaning, not just keywords.

Traditional keyword search:

Query: "What's our return policy?"

Result: 47 documents containing "return" or "policy"

User must manually synthesize results

AI receives ambiguous information and hallucinates

Vectorized semantic search:

Query: "What's our return policy?"

Result: 5 semantically relevant documents, ranked by relevance

AI receives clear, contextualized information

Agent applies business logic reliably

How it works: Documents are converted into high-dimensional vectors (embeddings) that capture semantic meaning. "The customer returned the defective product" and "A faulty item was sent back by the buyer" produce similar vectors because they mean the same thing. Traditional keyword search would miss the second document entirely.

When agents search vectorized knowledge, they find semantically relevant documents — not keyword matches. This enables reliable, contextually grounded agentic AI.
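The contrast between keyword matching and semantic matching can be shown with the two example sentences above. This is a toy sketch: instead of a learned embedding model, a small hand-written `CONCEPTS` table (hypothetical) maps synonyms onto shared dimensions, and documents are ranked by cosine similarity, which is the same ranking step vector search applies to real dense embeddings.

```python
import math
import re
from collections import Counter

# Toy stand-in for a learned embedding model (hypothetical): synonyms map to a
# shared "concept" dimension. Real embeddings are dense vectors learned from data.
CONCEPTS = {
    "customer": "CUSTOMER", "buyer": "CUSTOMER",
    "returned": "RETURN", "sent": "RETURN",
    "defective": "DEFECT", "faulty": "DEFECT",
    "product": "PRODUCT", "item": "PRODUCT",
    "revenue": "FINANCE", "target": "FINANCE",
}
# "back" is dropped so that "sent back" counts once, via "sent".
STOPWORDS = {"the", "a", "an", "was", "by", "back", "for"}


def tokens(text: str) -> list[str]:
    return [w for w in re.findall(r"[a-z]+", text.lower()) if w not in STOPWORDS]


def keyword_overlap(a: str, b: str) -> set[str]:
    """What keyword search matches on: shared literal words."""
    return set(tokens(a)) & set(tokens(b))


def embed(text: str) -> Counter:
    """Map text to a sparse vector over concept dimensions."""
    return Counter(CONCEPTS[w] for w in tokens(text) if w in CONCEPTS)


def cosine(u: Counter, v: Counter) -> float:
    dot = sum(u[k] * v[k] for k in u)
    norm = (math.sqrt(sum(x * x for x in u.values()))
            * math.sqrt(sum(x * x for x in v.values())))
    return dot / norm if norm else 0.0


def semantic_search(query: str, docs: list[str], k: int = 5) -> list[tuple[float, str]]:
    """Rank documents by cosine similarity to the query vector, best first."""
    q = embed(query)
    return sorted(((cosine(q, embed(d)), d) for d in docs), reverse=True)[:k]
```

With these definitions, "The customer returned the defective product" and "A faulty item was sent back by the buyer" share no keywords, yet their vectors point in the same direction, so semantic search ranks the second sentence first.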

Why "Just Connect to SharePoint" or "Google Drive" Fails

Many organizations assume the solution is simple: "Connect our LLMs to SharePoint and let employees search company documents."

The core problem: SharePoint was designed for humans navigating documents. Simply connecting to SharePoint provides what is essentially a keyword search. Companies are bolting a new form of technology onto old data structures that don't speak its language. Shared drives were not designed to feed agentic AI with accurate, contextualized, governed information. Bolting AI onto SharePoint or Google Drive increases hallucination risk and assumes your shared drive is perfectly clean, managed, and always up to date, an assumption most employees would laugh at. True AI Knowledge Management needs net-new capabilities.

Knowledge Management Capabilities

To scale the management of AI knowledge across the organization, it’s critical to look for the following capabilities in your AI Knowledge Management software.

100+ file types supported — Documents, videos, spreadsheets, databases, presentations, anything your organization uses

Automatic vectorization — Files are instantly converted to semantic embeddings upon upload

Dynamic chunking — Content is intelligently segmented for optimal retrieval and context window sizing

Unlimited knowledge bases — Organize by department, topic, or any logical grouping

Always-current — Knowledge is synchronized with agents in real-time

Multi-language support — Knowledge automatically translates for each user's language preference regardless of the source intelligence language

One-click management — Adding, updating, or removing files propagates instantly across all AI agents, so non-technical knowledge owners can manage the knowledge they are responsible for themselves
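Chunking splits a document into pieces small enough to embed and retrieve individually. The sketch below is one simple reading of "dynamic" chunking, assuming word counts as a stand-in for tokens: it packs whole paragraphs into chunks up to a budget and falls back to fixed-size windows only for oversized paragraphs. The function name and the 200-word default are illustrative choices, not a product's actual algorithm.

```python
def chunk_document(text: str, max_words: int = 200) -> list[str]:
    """Pack whole paragraphs into chunks of at most max_words words.

    Paragraph boundaries are kept where possible so each chunk stays
    self-contained; a paragraph longer than max_words is split into
    fixed-size word windows as a fallback.
    """
    if max_words <= 0:
        raise ValueError("max_words must be positive")
    chunks: list[str] = []
    current: list[str] = []
    count = 0

    def flush() -> None:
        nonlocal current, count
        if current:
            chunks.append("\n\n".join(current))
            current, count = [], 0

    for para in (p.strip() for p in text.split("\n\n")):
        if not para:
            continue
        words = para.split()
        if len(words) > max_words:
            flush()  # close the chunk in progress before splitting the long paragraph
            for i in range(0, len(words), max_words):
                chunks.append(" ".join(words[i:i + max_words]))
            continue
        if count + len(words) > max_words:
            flush()  # this paragraph would overflow the budget, so start a new chunk
        current.append(para)
        count += len(words)
    flush()
    return chunks
```

Keeping paragraphs intact tends to give each retrieved chunk a complete thought; many pipelines also add a small overlap between chunks, which this sketch omits.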

Self-Curation: Empowering Subject Matter Experts

Knowledge management is not a one-time project — it's a continuous practice. Subject Matter Experts and business leaders self-curate context in their domains:

Add new policies and procedures as the business evolves

Update existing content when processes change

Archive outdated information to maintain accuracy

Monitor which knowledge is actually used by agents

Provide feedback that improves knowledge quality and relevance

Result: Knowledge stays current and accurate.

Example Use Cases: When HR updates benefits, that update is immediately available to HR agents. When Sales launches a new product, that information flows into Sales agents instantly. The intelligence that employees' AI assistants retrieve should adapt and evolve with the business. SMEs own their domain knowledge; IT maintains governance and infrastructure.
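The self-curation loop (add, update, archive, monitor usage) works because every agent reads from one shared store, so a change made once is visible on the next retrieval. A minimal in-memory sketch follows; the `KnowledgeBase` class, the owner field, and the sample PTO text are hypothetical, and a substring match stands in for the vector search described earlier.

```python
from dataclasses import dataclass


@dataclass
class Document:
    text: str
    owner: str            # the SME responsible for keeping this document current
    version: int = 1
    archived: bool = False
    hits: int = 0         # how often agents have retrieved it


class KnowledgeBase:
    """Hypothetical shared store that every agent reads from."""

    def __init__(self) -> None:
        self._docs: dict[str, Document] = {}

    def upsert(self, doc_id: str, text: str, owner: str) -> None:
        """Add a new document, or replace an existing one and bump its version."""
        doc = self._docs.get(doc_id)
        if doc is None:
            self._docs[doc_id] = Document(text, owner)
        else:
            doc.text, doc.owner = text, owner
            doc.version += 1
            doc.archived = False  # republishing restores an archived document

    def archive(self, doc_id: str) -> None:
        """Hide outdated content from agents without deleting it."""
        self._docs[doc_id].archived = True

    def retrieve(self, term: str) -> list[tuple[str, int, str]]:
        """Return (id, version, text) for active documents containing the term."""
        results = []
        for doc_id, doc in self._docs.items():
            if not doc.archived and term.lower() in doc.text.lower():
                doc.hits += 1
                results.append((doc_id, doc.version, doc.text))
        return results

    def usage_report(self) -> dict[str, int]:
        """Retrieval counts per document, for SMEs monitoring what agents use."""
        return {doc_id: doc.hits for doc_id, doc in self._docs.items()}
```

For example, after an HR lead calls `upsert("benefits", ...)` with a revised PTO policy, the very next `retrieve("pto")` from any agent returns the new version.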

Data Management: Structured Context

Data management encompasses the organization's structured intelligence — customer databases, product catalogs, pricing tables, inventory systems, financial data — made accessible and actionable for agentic AI.

The Structured Data Challenge: LLMs Cannot Query Databases

This is critical to understand: Large language models cannot reliably query databases or execute business logic.

When an agent needs to answer "What's our Q4 revenue target?", it cannot:

Execute a SQL query to retrieve the data

Apply business logic to determine what "revenue target" means in context

Distinguish between budget vs. actual vs. forecast

Guarantee accuracy

Instead, the model generates a plausible-sounding answer based on training data patterns — essentially guessing. "Your Q4 target is likely $50-75M." This is useless for business operations. This is a common type of hallucination business users see.

The solution: Advanced AI toolsets that are part of the agentic orchestration layer. These toolsets act as translators between agentic AI and structured data systems:

Agent requests information: "Get our Q4 revenue target from the Finance database"

AI toolset translates: Converts the agent's natural language request into a safe, governed database query

Toolset executes: Queries the database (or API) with appropriate security and access controls

Result returned: Agent receives accurate, auditable data — not a guess

Agent applies: Agent uses the structured data in its reasoning and recommendations

This approach ensures accuracy, auditability, and security. The AI never directly accesses the database; it works through governed translation layers.
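The translation layer described above can be sketched as a tool the agent calls with structured arguments, while the tool owns the SQL, the access check, and the audit record. This sketch assumes a hypothetical `revenue_targets` table, role names, and made-up sample figures, and uses Python's built-in `sqlite3` module as a stand-in for a real Finance database.

```python
import sqlite3
from datetime import datetime, timezone

# Hypothetical governance settings for this one tool.
ALLOWED_ROLES = {"finance", "executive"}
ALLOWED_KINDS = {"budget", "actual", "forecast"}
AUDIT_LOG: list[dict] = []


def demo_finance_db() -> sqlite3.Connection:
    """In-memory stand-in for a Finance database; the figures are made-up samples."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE revenue_targets (quarter TEXT, kind TEXT, amount_usd INTEGER)")
    conn.executemany(
        "INSERT INTO revenue_targets VALUES (?, ?, ?)",
        [("Q4", "budget", 62_000_000), ("Q4", "forecast", 58_500_000)],
    )
    return conn


def get_revenue_target(conn: sqlite3.Connection, user_role: str,
                       quarter: str, kind: str = "budget") -> dict:
    """Tool an agent calls with structured arguments instead of writing SQL itself."""
    allowed = user_role in ALLOWED_ROLES
    AUDIT_LOG.append({
        "at": datetime.now(timezone.utc).isoformat(),
        "role": user_role, "quarter": quarter, "kind": kind, "allowed": allowed,
    })
    if not allowed:
        raise PermissionError(f"role {user_role!r} may not read revenue targets")
    if kind not in ALLOWED_KINDS:
        raise ValueError(f"kind must be one of {sorted(ALLOWED_KINDS)}")
    # Parameterized query: the agent's input is bound as data, never spliced into SQL.
    row = conn.execute(
        "SELECT amount_usd FROM revenue_targets WHERE quarter = ? AND kind = ?",
        (quarter, kind),
    ).fetchone()
    # Returning None for a missing row lets the agent say "no data" instead of guessing.
    return {"quarter": quarter, "kind": kind,
            "amount_usd": row[0] if row else None,
            "source": "revenue_targets"}
```

Requiring an explicit `kind` argument is one way to force the budget vs. actual vs. forecast distinction that a model cannot reliably infer on its own.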

CRITICAL ALERT:

Large language models cannot reliably query databases or execute business logic.

THE NEED:

Agentic tools that understand structured data on ingest, or connect to third-party databases via MCP, and then analyze that structured data within agentic workflows.

Native Data Storage or External Connections

Your company’s AI orchestration platform must support two approaches:

1. Native Data Storage — Organizations can store structured data natively within the AI platform:

Customer profiles and account hierarchies

Product catalogs and pricing tables

Policy and procedure reference data

Historical transaction data needed for context

Native storage provides fast access, tight integration, and simplified governance.

2. External Connections — Organizations can connect to existing business systems:

CRM systems (Salesforce, HubSpot)

BI platforms (Tableau, Power BI)

Data warehouses (Snowflake, BigQuery)

ERP systems (SAP, NetSuite)

Custom databases and APIs

External connections preserve existing investments while enabling agentic AI to access real-time business data.

Model Context Protocol (MCP): The Next Generation of API

As agentic AI scales, a new API standard is emerging: Model Context Protocol (MCP). This is critical infrastructure for connecting AI agents to business systems reliably and safely.

What is MCP?

MCP is an open standard that enables AI models and agents to interact with external systems (databases, APIs, business applications) through a standardized interface. Instead of each AI platform building its own custom integration to Salesforce, the MCP approach is: Salesforce exposes an MCP interface once, and any MCP-compatible AI platform can connect through it.

Why MCP matters:

Interoperability — Any AI platform can connect to any business system that implements MCP

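Security — The MCP specification defines an OAuth-based authorization framework for remote servers; authentication enforcement and audit logging are implemented by the servers and platforms that adopt it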

Governance — Organizations control what data AI agents can reach by deciding which MCP servers and tools are exposed to them, and with what permissions

Simplicity — Developers don't build custom integrations for each system; they implement MCP once
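Under the hood, MCP messages are JSON-RPC 2.0: a client discovers a server's tools with `tools/list` and invokes one with `tools/call`. The hand-rolled sketch below shows only those two message shapes. The `lookup_account` tool and its sample data are hypothetical, and a real MCP server also needs the initialization handshake, a transport, and authorization, which production code would normally get from an official MCP SDK.

```python
import json

# Hypothetical tool exposed by a CRM connector; the name and schema are illustrative.
TOOLS = {
    "lookup_account": {
        "description": "Look up a CRM account summary by company name.",
        "inputSchema": {
            "type": "object",
            "properties": {"name": {"type": "string"}},
            "required": ["name"],
        },
    }
}
DEMO_ACCOUNTS = {"ABC Corp": "Active customer; renewal due next quarter."}  # made-up sample data


def _reply(req_id, *, result=None, error=None) -> str:
    """Wrap a result or an error in a JSON-RPC 2.0 response envelope."""
    msg = {"jsonrpc": "2.0", "id": req_id}
    if error is not None:
        msg["error"] = error
    else:
        msg["result"] = result
    return json.dumps(msg)


def handle(raw: str) -> str:
    """Answer one JSON-RPC 2.0 request using MCP's tools/list and tools/call shapes."""
    req = json.loads(raw)
    req_id, method = req.get("id"), req.get("method")
    if method == "tools/list":
        tools = [{"name": name, **spec} for name, spec in TOOLS.items()]
        return _reply(req_id, result={"tools": tools})
    if method == "tools/call":
        params = req.get("params", {})
        if params.get("name") not in TOOLS:
            return _reply(req_id, error={"code": -32602, "message": "Unknown tool"})
        account = params.get("arguments", {}).get("name", "")
        text = DEMO_ACCOUNTS.get(account, f"No account named {account!r}.")
        return _reply(req_id, result={"content": [{"type": "text", "text": text}],
                                      "isError": False})
    return _reply(req_id, error={"code": -32601, "message": "Method not found"})
```

Because every MCP server answers the same `tools/list` request, an AI platform can discover what a business system offers without a custom integration for each one.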

Strategic implication: Organizations should prioritize MCP-compatible integrations when connecting structured data systems to agentic AI platforms. MCP is well positioned to become the industry standard for AI-to-business-system connections.

Strategic recommendation: Begin asking about MCP support in all vendor questionnaires, RFIs, and RFPs. IT departments must start requiring it of every software vendor that will store company data the AI needs to retrieve.

The Three Security Attack Surfaces: Protecting Corporate Context

Centralized context management introduces security risks that must be managed across three critical attack surfaces:

Attack Surface 1: External Threats (Secure from Outside Compromise)

Centralized context is an attractive target for external attackers. A breach exposes comprehensive organizational knowledge.

Mitigation:

Encryption at rest and in transit

Multi-factor authentication for all access

Network isolation and DLP (Data Loss Prevention) controls

Regular security audits and penetration testing

Compliance with SOC 2, ISO 27001, or equivalent standards

Attack Surface 2: Internal Access Control (Ensuring Correct Visibility)

Not all employees should access all context. A sales employee shouldn't see HR salaries. A customer service agent shouldn't see executive strategy documents. An engineer shouldn't see customer financial data.

Mitigation:

Attribute-based access control (ABAC) — Fine-grained permissions at the data element level

Role-based governance — Departments and teams have defined access levels

AI agent governance — Agents inherit their operator's permissions; they can only access what their user is authorized to access

Audit logging — Every context access is logged, trackable, and auditable
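Attribute-based access control and permission inheritance fit together simply: the agent holds no permissions of its own and evaluates every request against its operator's attributes, logging each decision. A minimal sketch follows; the attribute names, the clearance scale, and the sample documents are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class User:
    name: str
    department: str
    clearance: int  # hypothetical scale: 1 general, 2 confidential, 3 restricted


@dataclass(frozen=True)
class Resource:
    title: str
    department: str  # owning department, or "all" for company-wide content
    sensitivity: int  # same scale as User.clearance


def can_access(user: User, resource: Resource) -> bool:
    """ABAC rule: decide from attributes of both the user and the resource."""
    same_department = resource.department in ("all", user.department)
    return same_department and user.clearance >= resource.sensitivity


@dataclass
class Agent:
    operator: User  # the employee the agent acts for; it has no permissions of its own
    audit_log: list = field(default_factory=list)

    def fetch(self, resources: list[Resource]) -> list[Resource]:
        """Return only what the operator may see, logging every decision."""
        visible = []
        for r in resources:
            allowed = can_access(self.operator, r)
            self.audit_log.append((self.operator.name, r.title, allowed))
            if allowed:
                visible.append(r)
        return visible
```

Here a sales employee's agent sees the sales playbook and the company-wide holiday calendar but not HR salary bands, and the denied attempt still appears in the audit log.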

Attack Surface 3: Model Training Data Leakage (Protect from LLM Training)

When AI agents interact with proprietary company context, there's a risk that sensitive information could inadvertently be included in model training data — creating long-term data exposure.

Mitigation:

Use models with strict data-use policies — Ensure API calls are not used for model training

Data handling transparency — Document exactly how each external AI service handles your data

Preference for self-hosted or private models — For highly sensitive context, consider models deployed on your own infrastructure

This governance belongs in Tier 4: AI Governance policies define which models can access which context, and sensitive data is isolated to models with strong data protection policies.
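A Tier 4 policy of this kind can be expressed as a filter from data sensitivity to permitted models. The sketch below assumes a hypothetical four-level sensitivity scale and two model attributes (whether the vendor trains on inputs, and whether the model is self-hosted); the model names are placeholders.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelPolicy:
    name: str
    trains_on_inputs: bool  # per the vendor's documented data-use terms
    self_hosted: bool


# Hypothetical sensitivity scale: 1 public, 2 internal, 3 confidential, 4 restricted.
def allowed_models(models: list[ModelPolicy], sensitivity: int) -> list[str]:
    """Names of the models that context at this sensitivity level may be sent to."""
    allowed = []
    for m in models:
        if m.trains_on_inputs and sensitivity >= 2:
            continue  # only public context may reach a model that trains on inputs
        if sensitivity >= 4 and not m.self_hosted:
            continue  # restricted context stays on the company's own infrastructure
        allowed.append(m.name)
    return allowed
```

Restricted context ends up eligible only for the self-hosted model, while public content can go to any of them.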

A Model for AI Context Management for Business

Company Context Management as Organizational Transformation

Implementing centralized Company Context Management is not a technology project. It's an organizational transformation.

FROM: Departments managing their own siloed documents in SharePoint, Confluence, or local drives.
TO: Subject Matter Experts and business leaders curating, publishing, and managing context as living organizational assets.

FROM: IT managing data as an infrastructure concern.
TO: IT enabling and governing while business teams own context curation and lifecycle.

FROM: Agentic AI operating blind, guessing at answers.
TO: Agentic AI operating with organizational intelligence, generating accurate, auditable outcomes.

Organizations that embrace this transformation will scale agentic AI confidently. Those that treat context as "just another technology problem" will continue to experience AI failures.

Tier 6: AI Orchestration

This tier is the central nervous system connecting governance (Tier 4), knowledge (Tier 5), and models (Tier 3) into coordinated, multi-step workflows. It's where the transformation from human-centric to AI-centric operations takes place, and where humans evolve from doers to directors.

Company Process as Context: The Third Layer

Company Context spans three tiers and four components:

Information Authorization (Tier 4) - Who has access to what internal information and data?

Knowledge (Tier 5) — Organizational facts, policies, and reference data

Data (Tier 5) — Structured business information accessed through governed APIs

Process (Tier 6) — How work gets done; the workflows that define decision logic, approval patterns, and task sequencing

Process context is uniquely positioned in Tier 6 because this is where the migration from human work to AI work occurs. Process designers, department leaders, and SMEs encode company workflows into agent meta-prompts:

Decision logic — "If prospect fit score > 80, auto-draft outreach. If 60 to 80, flag for human review. If < 60, reject."

Approval patterns — "All deals > $50K require manager approval before execution."

Escalation rules — "If agent confidence < 70%, escalate to SME for guidance."

Brand standards — "All outreach must use conversational tone, mention company value prop, include social proof."
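Rules like these can also be written as code that the orchestration layer checks before an agent acts, which makes them testable. A minimal sketch, assuming the thresholds from this section's examples (fit-score bands as in the Sales meta-prompt later in this section, the $50K approval threshold, and the 70% confidence escalation); the `Prospect` fields and action names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Prospect:
    name: str
    fit_score: int            # 0-100
    deal_value_usd: float
    agent_confidence: float   # 0.0-1.0


def next_action(p: Prospect) -> str:
    """Apply the example process rules in a fixed order of precedence."""
    if p.agent_confidence < 0.70:
        return "escalate_to_sme"              # escalation rule
    if p.fit_score < 60:
        return "reject"
    if p.fit_score <= 80:
        return "flag_for_human_review"
    if p.deal_value_usd > 50_000:
        return "draft_then_manager_approval"  # approval pattern
    return "auto_draft_outreach"
```

Checking confidence first means an uncertain agent escalates even when the score looks favorable; the order of the checks is itself a process decision worth documenting.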

When agents execute with embedded process context, they don't just follow generic logic — they follow your company's logic.

What It Encompasses

Agentic AI Capabilities — Agents that research, reason, decide, and take action across multi-step workflows (not just chat).

No-code agent builder — Visual workflow design enabling Subject Matter Experts and process designers to build sophisticated automation without coding.

Meta-prompt control — Department leaders and admins define how agents behave: tone, decision logic, approval thresholds, escalation rules. These meta-prompts embed company standards into agent decision-making.

Human-in-the-loop patterns — Agents pause for human approval at critical decision points rather than executing autonomously.

Prompt routing — Tasks are directed to the most appropriate model or agent based on context and requirements.

Department-level autonomy — Business teams design, manage, and refine agents without IT bottlenecks.
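Prompt routing means deciding which agent or model handles a request. Production routers often use a classifier or a small model; the sketch below uses a keyword rule table only to show the dispatch shape. The route names and keywords are hypothetical.

```python
import re

# Hypothetical routing table; the first rule with a matching keyword wins.
ROUTES = [
    ({"prospect", "prospects", "outreach", "pipeline"}, "sales-prospecting-agent"),
    ({"benefits", "pto", "onboarding", "payroll"}, "hr-agent"),
    ({"contract", "nda", "clause"}, "legal-review-agent"),
]
FALLBACK = "general-assistant"


def route(task: str) -> str:
    """Pick the agent for a task, falling back to a general assistant."""
    words = set(re.findall(r"[a-z]+", task.lower()))
    for keywords, agent in ROUTES:
        if words & keywords:
            return agent
    return FALLBACK
```

An explicit fallback keeps unmatched requests answerable instead of failing.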

Why It's Strategic: From Doers to Directors

Traditional enterprise workflows are human-centric: employees execute tasks, make decisions, and take actions. AI orchestration inverts this model. Employees shift from doers to directors — defining outcomes, guiding agent behavior, and reviewing results.

The transformation:

Before: Sales rep researches prospects (2 hours) → drafts outreach (1 hour) → sends emails (30 min) → manually tracks responses → repeats weekly.

After: Sales rep defines criteria ("Find healthcare prospects, $10M+ revenue, Northeast") → agent orchestrates research, analyzes fit, drafts outreach → rep reviews recommendations → rep approves execution → rep monitors outcomes. Total active time: 15 minutes.

The work doesn't disappear. It scales. The rep now orchestrates 10 outreach campaigns per week instead of one. They operate at a higher level, making strategic decisions rather than executing tasks.

Meta-Prompt Control: Encoding Company Operations

Meta-prompts are the instructions that shape how agents behave. Unlike user prompts (which employees input for each task), meta-prompts are set once by process designers and enforce company standards across thousands of agent executions.

Example meta-prompt for a Sales prospecting agent:

"You are a sales prospecting agent. Your job is to identify qualified leads based on company fit and opportunity size. Use company knowledge to understand our ICP. Research prospects using external data and company CRM. Score prospects on fit (0-100). For scores > 80, draft personalized outreach using our brand voice. For scores 60-80, flag for human review. For scores < 60, reject. Always cite data sources. Escalate unusual cases to the assigned sales manager."

This meta-prompt ensures consistency: every prospect is evaluated by the same criteria, every outreach follows company brand standards, and escalation happens at the right threshold. Department leaders can adjust meta-prompts as strategy evolves; agents immediately reflect the change.
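Mechanically, a meta-prompt is usually sent as a system message ahead of each employee's task prompt, following the system/user message convention most chat-model APIs use. The hypothetical registry below stores one versioned meta-prompt per agent, so publishing a new version changes every later request without touching user prompts.

```python
from dataclasses import dataclass, field


@dataclass
class MetaPromptRegistry:
    """Hypothetical store of meta-prompts, one per agent, set by process designers."""
    prompts: dict[str, tuple[int, str]] = field(default_factory=dict)

    def publish(self, agent: str, text: str) -> int:
        """Create or replace an agent's meta-prompt and return the new version number."""
        version = self.prompts.get(agent, (0, ""))[0] + 1
        self.prompts[agent] = (version, text)
        return version

    def build_messages(self, agent: str, user_prompt: str) -> list[dict[str, str]]:
        """Prepend the current meta-prompt to an employee's task prompt."""
        _, meta = self.prompts[agent]
        return [
            {"role": "system", "content": meta},
            {"role": "user", "content": user_prompt},
        ]
```

Versioning the meta-prompt also gives an audit trail of which standards were in force when a given agent run happened.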

Empowering Distributed Process Ownership

Department leaders and Subject Matter Experts own process design within their domains:

Define agent workflows and decision logic

Set approval thresholds and escalation rules

Embed company standards into meta-prompts

Monitor agent performance and outcomes

Refine processes based on feedback and results

IT maintains governance and infrastructure; business teams own process strategy. This distributed model accelerates innovation and ensures agents reflect each department's unique requirements.

The Employee-Orchestrated Agent Operating Model

The future is neither fully autonomous AI nor human-only work. It's employees orchestrating AI agents:

Employee defines goal and criteria

Agent researches, analyzes, synthesizes

Agent presents recommendations with reasoning

Employee guides the agent ("Filter to Northeast," "Increase threshold")

Employee approves execution

Employee monitors outcomes

Feedback loop improves future recommendations

This cycle repeats thousands of times across departments. Employees operate at a higher level, making strategic decisions. Agents handle execution. Scale emerges without replacing humans.

Key Capabilities in an AI Orchestration Platform

When evaluating AI Orchestration software for your organization, consider the following critical capabilities you should require of the vendor you ultimately select.

No-code agent builder — Visual workflow design for sophisticated automation

Agentic AI modes — Agents that research, reason, decide, and act

Meta-prompt control — Department leaders embed process and brand standards into agent behavior

Human-in-the-loop controls — Agents pause for approval at critical decision points

Department-level autonomy — Business teams design and manage agents without IT bottlenecks

Centralized visibility — All agents are visible to IT and admins with full audit capability

4. AI-DRIVEN COMPANY OPERATIONS

A Vision for AI Transformation Across Business Operations

This is where humans and AI collaborate daily — where the transformation becomes visible and measurable.

The layers we've described (governance, context management, and process orchestration) all converge at the point of business operations. This is where AI transforms work and where organizations capture value. With the right stack in place, use cases begin to scale seamlessly across the business.

What It Encompasses

When your company's infrastructure is re-oriented around the right AI stack, an AI-Driven Operation can have the following characteristics:

Employees interact with AI assistants — Conversational interfaces become the primary interaction model for accessing company intelligence, executing processes, and orchestrating workflows.

Use cases are activated at scale — Department-specific AI agents (Sales AI, HR AI, Customer Success AI, Operations AI) are deployed across teams, enabling consistent, governed execution.

Human-in-the-loop workflows execute daily — Employees guide, approve, and refine agent recommendations in real-time.

Third-party integrations extend reach — AI orchestration connects to external systems (CRM, BI tools, ERP, and more), and AI merges qualitative company knowledge with quantitative data.

Measurable business outcomes emerge — Productivity gains, cost reductions, quality improvements, and compliance assurance become visible and quantifiable.

Conversation as the Operating Model

In AI-native operations, conversation becomes the primary interface for work.

Instead of navigating dashboards, filling out forms, or clicking through menus, employees converse with AI assistants:

"Find qualified prospects in healthcare with $10M+ revenue in the Northeast"

"Draft a proposal for ABC Corp based on last quarter's engagement"

"Summarize this week's customer feedback and flag recurring issues"

"Update the benefits FAQ with the new PTO policy and propagate to all HR agents"

The assistant understands context, accesses governed knowledge and data, applies process logic, and either executes autonomously or presents recommendations for approval. The employee guides, refines, and approves. The cycle repeats.

This conversational operating model eliminates friction: no training on complex software, no navigating nested menus, no switching between tools. Employees ask, agents deliver, humans approve. Work scales.

Human-in-the-Loop: Humans as Decision Makers, Not Task Executors

AI-native operations is fundamentally human-centric. Humans don't disappear; they elevate.

The shift:

Traditional: Employee executes tasks (research 2 hours, analyze 1 hour, execute 30 min). Limited by human time.

AI-Native: Employee orchestrates AI (define goal 5 min, review recommendations 10 min, approve execution 5 min). Employee makes strategic decisions. AI handles execution. Scale emerges without replacement.
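Working through the per-cycle times given in the comparison above makes the difference concrete. This is arithmetic on the stated figures, not a measured result:

```latex
\begin{aligned}
t_{\text{traditional}} &= 120 + 60 + 30 = 210\ \text{min} \\
t_{\text{AI-native}} &= 5 + 10 + 5 = 20\ \text{min} \\
\frac{t_{\text{traditional}}}{t_{\text{AI-native}}} &= \frac{210}{20} = 10.5
\end{aligned}
```

A roughly tenfold reduction in active time per cycle is the same order of magnitude as the earlier Sales example of 10 outreach campaigns per week instead of one.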

Humans remain in the loop at three critical moments:

Guidance — "Filter these results further," "Adjust the criteria," "Try a different approach"

Approval — "Yes, proceed," "No, escalate this," "Make this change first"

Monitoring — "Did this work as expected?", "What should we adjust next time?"

This human judgment is irreplaceable. AI handles execution reliability; humans handle strategic direction.

USE CASE IN MOTION

The Right People, Right Process, Right Time

AI-native operations means getting the right AI processes activated by the right people at the right time. This happens through structured conversation between employees and AI assistants.

For example: A sales leader asks their AI assistant: "Show me all prospects in healthcare with $10M+ revenue in the Northeast."

The AI Assistant:

Accesses governed context (Tier 5) — Pulls customer data, product information, company strategy from the centralized knowledge layer

Applies company process logic (Tier 6) — Uses embedded decision rules: qualification criteria, fit scoring, outreach standards

Orchestrates the workflow — Research → Analysis → Ranking → Draft outreach → Present recommendations

Pauses for human judgment — Presents findings and asks: "Does this look right?"

Executes upon approval — Sends outreach, logs interactions, and monitors outcomes
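The key property of this workflow is that nothing executes until a person approves. The sketch below models that as a run that is created in an "awaiting approval" state and only sends outreach after an explicit decision. The ranking rule (fit score above 80), the prospect names, and the `send` callback are hypothetical stand-ins for the research, analysis, and execution steps.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Callable


class Status(Enum):
    AWAITING_APPROVAL = "awaiting_approval"
    EXECUTED = "executed"
    REJECTED = "rejected"


@dataclass
class OutreachRun:
    request: str
    recommendations: list[dict]
    status: Status = Status.AWAITING_APPROVAL
    log: list[str] = field(default_factory=list)


def plan(request: str, prospects: list[dict]) -> OutreachRun:
    """Research, analysis, and ranking, reduced here to sorting by fit score."""
    ranked = sorted(prospects, key=lambda p: p["fit_score"], reverse=True)
    recs = [{"name": p["name"], "fit_score": p["fit_score"],
             "draft": f"Draft outreach for {p['name']} (placeholder text)"}
            for p in ranked if p["fit_score"] > 80]
    return OutreachRun(request, recs)  # pauses here: no side effects yet


def decide(run: OutreachRun, approved: bool, send: Callable[[dict], None]) -> OutreachRun:
    """The human decision point; outreach is sent only after approval."""
    if run.status is not Status.AWAITING_APPROVAL:
        raise RuntimeError("this run has already been decided")
    if not approved:
        run.status = Status.REJECTED
        return run
    for rec in run.recommendations:
        send(rec)
        run.log.append(f"sent outreach to {rec['name']}")
    run.status = Status.EXECUTED
    return run
```

Separating `plan` from `decide` keeps every side effect behind the approval step, and the run's log records what was executed.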

This single conversation scales to thousands of parallel workflows across the organization. Each department, each team, each employee orchestrates AI processes tailored to their domain.

From Silos to Intelligence: Organizational Transformation

The result of this vision is an organization where:

IT & Executive Leadership establish governance guardrails, then trust departments to innovate within those guardrails

Subject Matter Experts continuously shape organizational intelligence by curating and maintaining company knowledge

Process Designers encode company strategy and values into agent decision-making, standardizing how AI thinks about problems

Frontline employees orchestrate AI processes daily, operating at a higher strategic level, freed from execution burdens

Organization-wide — AI amplifies human capability at every level; work scales without proportional headcount growth

This is the competitive advantage: organizations that achieve this transformation operate with fundamentally different economics than those still executing tasks manually.

Organizational Operating Model

Just as day-to-day tasks, responsibilities, and workflows have evolved from the pre-internet era to today, employees' day-to-day work will look different as organizations evolve in the AI era. The transformation requires a clear distribution of responsibility across organizational layers. No single team owns AI operations; success requires coordination across governance, context, process, and execution. Consider the following high-level model of who in the organization is responsible for what types of work.

The result: Distributed ownership across four organizational layers. IT enables; SMEs inform; process designers guide; frontline workers execute with AI speed and accuracy.

Centralized AI Management: One Platform, Infinite Use Cases

The traditional enterprise approach to AI is fragmented: Sales buys its own AI tool, HR buys a different one, Customer Success deploys chatbots independently. The result is shadow sprawl, inconsistent outcomes, and governance chaos.

An AI-First Operation approaches things differently. AI operations are centralized through a unified platform that enables:

Unified governance — One set of policies, access controls, and compliance standards applied across all use cases and departments.

Shared Company Context — All agents access the same centrally managed knowledge and data (Tier 5), ensuring consistency and accuracy.

Reusable processes — Orchestration workflows built in one department can be adapted and reused by others (Tier 6), accelerating innovation and reducing redundancy.

Centralized visibility — IT and leadership have full transparency into all AI operations: which agents are running, who's using them, what outcomes they're delivering, and what compliance risks exist.

Single interface for employees — Rather than learning different tools for different use cases, employees interact with a unified AI assistant that adapts to their role, department, and task.

Result: Organizations scale AI faster, with lower risk, and higher ROI. Employees experience consistent, reliable AI across all workflows. IT maintains control without becoming a bottleneck.

Scaling Use Cases Across the Business

Tier 7 is where use case proliferation happens — but in a controlled, governed manner.

Phase 1: Pilot — A department builds an agent for a specific workflow (e.g., HR onboarding assistant). The agent operates with Tier 4 governance, accesses Tier 5 context, and executes Tier 6 orchestrated processes.

Phase 2: Refine — The department collects feedback, adjusts meta-prompts, improves knowledge sources, and refines workflows. The agent improves.

Phase 3: Scale — Once validated, the agent is rolled out to the entire HR team. Other departments observe the success and adapt the workflow for their needs (e.g., Sales adapts onboarding logic for customer onboarding).

Phase 4: Proliferate — The organization now has dozens of agents across departments, all governed by the same policies (Tier 4), accessing the same centrally managed context (Tier 5), and orchestrated through reusable processes (Tier 6). IT maintains visibility and control; departments maintain autonomy and innovation.

This is AI-native operations at scale: distributed use case development within centralized governance.

The vision is not autonomous AI making decisions independently.

It's operationalized AI where the right people activate the right AI processes at the right time, guided by human-in-the-loop oversight.

ABOUT SAIRIS

Sairis enables enterprises to operationalize AI across their entire workforce with unified governance, knowledge management, and agent orchestration. Organizations use Sairis to eliminate shadow AI sprawl, centralize company context across all AI operations, and execute scalable AI workflows with comprehensive oversight. Founded in 2024 and headquartered near Denver, Colorado, Sairis's platform has addressed the AI operational gap in deployments across diverse industries and organizational scales, from Fortune 500 enterprises to small and medium-sized businesses, spanning mining, manufacturing, retail, pharmaceuticals, and professional sports, including NFL teams. This cross-industry experience shapes Sairis's approach to solving the AI governance gap, context blindness, and operational misalignment that prevent most enterprises from realizing AI value at scale.

Learn more at Sairis.ai

Sources & Additional Research

Accenture. "The Front-Runners' Guide to Scaling AI." 2025. https://www.accenture.com/content/dam/accenture/final/accenture-com/document-3/Accenture-Front-Runners-Guide-Scaling-AI-2025-POV.pdf

Bain & Company. "Scaling AI to Transform the Enterprise." https://www.bain.com/insights/scaling-ai-to-transform-the-enterprise/

Boston Consulting Group. "Artificial Intelligence at Scale." https://www.bcg.com/capabilities/artificial-intelligence

Capgemini Research Institute. "AI-Powered Enterprise: Unlocking Value at Scale." Global AI Research Report. https://www.capgemini.com/insights/research-library/the-ai-powered-enterprise/

Cornell University. "Organizational Readiness for AI: A Framework for Enterprise Transformation." https://www.researchgate.net/publication/370704102_Organizational_Readiness_for_Artificial_Intelligence_Adoption

Deloitte. "The Rise of AI Agents and Collaborative Automation." https://www.deloitte.com/us/en/what-we-do/capabilities/applied-artificial-intelligence/articles/ai-agents-in-collaborative-automation.html

Economist Impact. "Outpaced by Innovation: The Growing Gap in Enterprise AI Adoption." https://impact.economist.com/new-globalisation/outpaced-by-innovation

Forbes. Hill, Andrea. "Why 95% Of AI Pilots Fail, And What Business Leaders Should Do Instead." August 21, 2025. https://www.forbes.com/sites/andreahill/2025/08/21/why-95-of-ai-pilots-fail-and-what-business-leaders-should-do-instead/

Forrester Research. "The Total Economic Impact of Enterprise AI Platforms." Technology Economic Impact Study, 2024. https://tei.forrester.com/go/Microsoft/AzureOpenAIService/?lang=en-us

Gartner Research. "AI Maturity Matters: Top Barriers in AI Implementation." June 12, 2025. https://www.gartner.com/en/documents/6587802

Gartner Research. "Predicts 2025: The Data and Analytics Governance Reset Continues With AI." https://www.gartner.com/en/documents/6794534

Harvard Business Review. Kenny, Graham, and Kim Oosthuizen. "Don't Let AI Reinforce Organizational Silos." September 2025. https://hbr.org/2025/09/dont-let-ai-reinforce-organizational-silos

Harvard Business Review. "Overcoming the Organizational Barriers to AI Adoption." November 2025. https://hbr.org/2025/11/overcoming-the-organizational-barriers-to-ai-adoption

IDC FutureScape. "Worldwide Artificial Intelligence and Automation 2025 Predictions." Market Intelligence Report, 2024. https://my.idc.com/getdoc.jsp?containerId=US51666724&pageType=PRINTFRIENDLY

IDC Study. "Unlock the Future of AI: Key Predictions for 2025 and Beyond." https://info.idc.com/futurescape-generative-ai-2025-predictions.html

KPMG. "Trust, Attitudes and Use of Artificial Intelligence: A Global Study 2025." https://kpmg.com/xx/en/our-insights/ai-and-technology/trust-attitudes-and-use-of-ai.html

Markovic, Dejan. "AI Trends for 2025: Enterprise Adoption Challenges & Solutions." Medium. https://medium.com/@dejanmarkovic_53716/ai-trends-for-2025-enterprise-adoption-challenges-solutions-e4ee075788dd

McKinsey & Company. "The State of AI in 2025: Agents, Innovation, and Transformation." https://www.mckinsey.com/capabilities/quantumblack/our-insights/the-state-of-ai

MIT CISR. "Grow Enterprise AI Maturity for Bottom-Line Impact." August 21, 2025. https://cisr.mit.edu/publication/2025_0801_EnterpriseAIMaturityUpdate_WoernerSebastianWeillKaganer

MIT Project NANDA. "State of AI in Business 2025: The GenAI Divide." July 2025. https://nanda.media.mit.edu/

MIT Sloan. "Special Report: MIT Sloan Insights for Success in AI-Driven Organizations." https://mitsloan.mit.edu/press/special-report-mit-sloan-insights-success-ai-driven-organizations

Northwestern Kellogg. "Artificial Intelligence at Work: An Integrative Perspective on the Impact of AI on Workplace Inequality." https://www.kellogg.northwestern.edu/academics-research/research/detail/2025/artificial-intelligence-at-work-an-integrative-perspective-on-the/

University of Oxford. "The AI Strategy Compass: A Human-Centred Framework for Institutional AI Change." September 17, 2025. https://aieou.web.ox.ac.uk/article/ai-strategy-compass-human-centred-framework-institutional-ai-change

PwC. "2025 AI Business Predictions." https://www.pwc.com/us/en/tech-effect/ai-analytics/ai-predictions.html

Stanford HAI. "The 2025 AI Index Report." 2025. https://hai.stanford.edu/ai-index/2025-ai-index-report

World Economic Forum. "Future of Jobs Report 2025." https://www.weforum.org/publications/the-future-of-jobs-report-2025/